LLM App Development in a Nutshell

A Beginner’s Guide to Building Efficient and Customized Large Language Model Solutions for Diverse Applications

Building AI applications has never been easier, thanks to the revolutionary invention of transformers. First presented in a paper called “Attention Is All You Need” by Vaswani et al. in 2017, this breakthrough has led to the development of Large Language Models (LLMs), which can understand and generate text across diverse contexts and domains.

The rise of highly advanced LLMs, such as OpenAI’s GPT series, Google’s BERT, and Meta’s Llama, has enabled capabilities previously thought unattainable.

Since the unprecedented adoption of OpenAI’s ChatGPT, leveraging pretrained LLMs to build custom solutions has become incredibly popular. These models have opened new horizons for software engineers, machine learning practitioners, and businesses, enabling the creation of innovative applications that can automate customer support, generate content, summarize information, and much more.

In this guide, we’ll explore the essentials of LLM development, focusing on how you can harness the power of pretrained LLMs and customize them to meet your specific needs. Whether you are a software engineer, a machine learning enthusiast, or someone keen on the latest advancements in AI, this guide will provide you with the foundational knowledge and practical insights to excel in this rapidly evolving field.

If you’re eager to dive deeper and gain hands-on experience in developing an LLM solution yourself, I invite you to join my upcoming Coursera course. A key highlight of the course is building an LLM app that interacts with the original Llama 2 paper published by Meta, allowing you to create your own powerful learning buddy that can answer all your questions on Llama 2.

Don’t miss this opportunity to become an early adopter and expert in this exciting field.

Today’s LLM Development Landscape

The landscape of Large Language Model (LLM) development today is dynamic and well supported by a variety of tools and platforms across the entire development lifecycle. Powerful hardware from NVIDIA, AMD, Intel, and specialized AI hardware providers like Cerebras and Graphcore enables efficient training, often accessed through GPU cloud platforms like AWS, Google Cloud, and CoreWeave. Essential software backbones such as PyTorch, TensorFlow, and NVIDIA CUDA facilitate model building and training.

In the pre-training and fine-tuning stages, open-source platforms like Hugging Face, Stability.ai, and MosaicML, alongside closed-source solutions from industry leaders like OpenAI, Google, and DeepMind, provide robust tools for refining models. Deployment is streamlined by services such as BentoML, Vellum, and Banana, which help integrate models into production environments.

Tools like Fiddler, Arize, and WhyLabs offer observability and monitoring capabilities, allowing developers to continuously track and optimize model performance. Governance and security are reinforced by platforms such as Robust Intelligence, Guardrails.ai, and Credo AI, ensuring ethical use and compliance.

Overall, the LLM development ecosystem is well-equipped to support end-to-end model development, making it an exciting and accessible field for software engineers and AI practitioners.

LLM Applications Across Industries

The versatility of LLMs sets them apart from other recent technology trends. It stems from their inherent ability to understand and generate human-like text across different contexts and domains, which makes them invaluable tools for organizations aiming to streamline operations and improve user engagement.

Here are some key areas where LLMs are making a substantial impact:

Q&A Systems

LLMs can be used to build interactive Q&A systems, for example on educational platforms. These systems allow users to ask questions about their course material and receive instant academic support, thereby enhancing the learning experience. The immediate feedback and personalized assistance that LLMs offer can make learning more engaging and effective for students at all levels.

Summarization Apps

Summarization apps are another key area where LLMs can make a substantial impact. News aggregators can utilize LLMs to summarize daily news articles, offering readers concise and comprehensive overviews that save time while keeping them informed. For researchers, LLMs can create tools that condense lengthy academic papers into digestible summaries, aiding them in quickly grasping key points and advancing their work without wading through voluminous texts.

Customer Support Automation

Customer support automation is a field ripe for LLM integration. Intelligent chatbots powered by LLMs can handle customer inquiries, provide product information, and assist with troubleshooting. This ensures 24/7 support and enhances customer satisfaction by providing quick and accurate responses. Additionally, email response automation can leverage LLMs to generate responses to common customer queries, reducing the workload on support teams and speeding up response times.

Having explored the diverse applications of LLMs, it’s crucial to understand the underlying mechanisms that make these models so powerful and versatile. In the next section, we will delve into a couple of fundamental concepts required to understand the inner workings of LLMs. So let’s take a closer look at how LLMs are built and what makes them tick.

Required Expertise to Build LLM Applications

Training LLMs and building applications around them requires expertise in machine learning, natural language processing, and software engineering. Most of us are experts in only one of these fields, which makes grasping how LLM app development works end to end a stretch for anyone new to it.

Here are a few highly relevant concepts from each of these areas that you should be familiar with:

Software Engineering (SWE)

- APIs and Cloud Services: Methods for deploying and serving models to make them accessible for real-time applications.

- Performance Monitoring: Continuously tracking the model’s performance to ensure it remains accurate and efficient.

- Data Augmentation: Techniques to increase the diversity of training data without actually collecting new data.

Machine Learning (ML)

- Transformers: The core architecture used in most LLMs, enabling efficient handling of long-range dependencies in text.

- Fine-Tuning: The process of adapting a pre-trained model to a specific task or domain using additional training data.

- Quantization and Pruning: Techniques to optimize model inference by reducing the size and complexity of the model.

Natural Language Processing (NLP)

- Self-Attention Mechanism: Allows the model to weigh the importance of different words in a sentence, enhancing context understanding.

- Embeddings: Represent words or tokens in continuous vector spaces, capturing semantic relationships.

- Tokenization: The process of converting text into tokens that the model can process.

By understanding these key technologies and concepts, you can better grasp the end-to-end process of developing and deploying LLM applications. Armed with this foundational knowledge, let’s delve into the next section to explore how LLMs are built from scratch.

How do LLMs work end to end?

While building an LLM from scratch is a challenging task that would exceed the scope of this article, it is valuable to understand the process, because we will run into many of its steps again when making decisions about open-source pretrained models.

1. Data Collection and Preprocessing

The foundation of an LLM is the data it is trained on. The process begins with collecting a large and diverse dataset, which can include text from books, articles, websites, and other textual sources. This data must be cleaned and preprocessed to ensure quality. Tokenization breaks down the text into smaller units called tokens (words, subwords, or characters) that the model can process. Cleaning removes noise and irrelevant content, improving the quality of the training data. Normalization converts the text to a consistent format, such as lowercasing and removing special characters.
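The cleaning, normalization, and tokenization steps described above can be sketched in a few lines of Python. This is a toy illustration only: the function names are made up for this example, and the whitespace tokenizer stands in for the subword tokenizers that production LLMs typically use.

```python
import re


def clean(text: str) -> str:
    """Remove simple noise: HTML-like tags and repeated whitespace."""
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def normalize(text: str) -> str:
    """Lowercase the text and drop everything except letters, digits, and spaces."""
    return re.sub(r"[^a-z0-9 ]", "", text.lower())


def tokenize(text: str) -> list[str]:
    """Toy word-level tokenizer (an assumption; real models use subword schemes)."""
    return text.split()


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer id in order of first appearance."""
    vocab: dict[str, int] = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab


raw = "<p>Hello,   World! Hello LLMs.</p>"
tokens = tokenize(normalize(clean(raw)))
vocab = build_vocab(tokens)
ids = [vocab[token] for token in tokens]

print(tokens)  # ['hello', 'world', 'hello', 'llms']
print(ids)     # [0, 1, 0, 2]
```

The integer ids are what the model actually consumes; the vocabulary is the lookup table that maps between text and ids.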

2. Model Architecture

The architecture of an LLM is typically based on the Transformer model, which has revolutionized natural language processing (NLP) due to its efficiency and scalability.

Transformers convert text tokens into dense vectors, known as embeddings, which represent their meanings in a continuous vector space. The attention mechanism within transformers allows the model to focus on different parts of the input text, assigning varying levels of importance to each token. Self-attention, a key feature, enables the model to consider the entire context of a word within the sentence. Transformers are composed of multiple layers of attention and feed-forward networks, allowing the model to capture complex patterns and relationships in the data.
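To make the attention idea more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is deliberately simplified: the tiny two-dimensional embeddings are invented for illustration, and the learned query, key, and value projections of a real transformer are omitted, so each token's embedding plays all three roles.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings: list[list[float]]):
    """Scaled dot-product self-attention with queries = keys = values = embeddings."""
    dim = len(embeddings[0])
    outputs, weights = [], []
    for query in embeddings:
        # Similarity of this token to every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        row = softmax(scores)
        weights.append(row)
        # New representation: attention-weighted average of all token vectors.
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, embeddings)) for i in range(dim)]
        )
    return outputs, weights


# Three made-up token embeddings; the first two point in similar directions.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token attends to every other token. In this example the first token puts more weight on the similar second token than on the unrelated third one.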

3. Training

Training an LLM involves feeding the processed data into the model and adjusting its parameters to minimize the prediction error.

The model first processes the input data to generate predictions. It then compares these predictions to the actual data to identify errors. By analyzing these errors, the model adjusts its internal parameters to improve future predictions. This iterative process of error correction and parameter adjustment continues until the model achieves a satisfactory level of accuracy.
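The predict, compare, adjust cycle can be illustrated with a deliberately tiny model. The sketch below fits a single parameter with gradient descent on a mean squared error; real LLMs follow the same loop in principle but predict the next token and have billions of parameters. The dataset, learning rate, and epoch count are arbitrary choices for this example.

```python
def train(data, lr=0.05, epochs=200):
    """Fit y = w * x by gradient descent on the mean squared error."""
    w = 0.0  # the model's only parameter, starting from an uninformed guess
    history = []
    for _ in range(epochs):
        # 1. Generate predictions with the current parameter.
        preds = [w * x for x, _ in data]
        # 2. Compare predictions to the actual targets.
        loss = sum((p - y) ** 2 for p, (_, y) in zip(preds, data)) / len(data)
        # 3. Compute how the error changes with w, then adjust w against it.
        grad = sum(2 * (p - y) * x for p, (x, y) in zip(preds, data)) / len(data)
        w -= lr * grad
        history.append(loss)
    return w, history


# Toy dataset that follows y = 2x.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w, history = train(data)

print(round(w, 4))              # 2.0
print(history[0], history[-1])  # loss at the first and last epoch
```

The recorded loss shrinks from epoch to epoch, which is the "iterative process of error correction" in miniature.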

4. Deployment

Once trained, the model is deployed to a production environment where it can be accessed by applications. Deployment considerations include optimizing the model for inference so that it runs efficiently in real-time scenarios. Techniques like quantization and pruning reduce the model’s size and improve its speed, usually at the cost of only a small loss in accuracy. The model is then integrated into applications through APIs, cloud services, or edge devices, making it accessible for real-time use. This step ensures that the model can deliver its capabilities to end users effectively and efficiently.
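As a rough illustration of quantization, the sketch below maps floating-point weights to 8-bit integers with a single shared scale factor and then restores them. This is one simple scheme (symmetric, per-tensor) chosen for clarity; the weight values are invented, and production toolchains use more elaborate variants.

```python
def quantize_int8(weights: list[float]):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest else 1.0  # avoid dividing by zero
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Approximate the original floats from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.52, -1.27, 0.03, 0.98]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(quantized)
print(max_error)
```

Each weight now needs one byte instead of four (for 32-bit floats), and the rounding error per weight is bounded by half the scale factor. That bounded error is the accuracy trade-off quantization makes.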

Understanding the process of building an LLM from scratch provides valuable insights into the complexity and resource demands involved. However, a more practical and efficient approach for many applications is to leverage pretrained LLMs. This method allows you to utilize the robust capabilities of existing models while tailoring them to meet specific needs and use cases.

In the following section, we will explore how to build on top of pretrained LLMs in practice.

How to Build on Top of Pretrained LLMs?

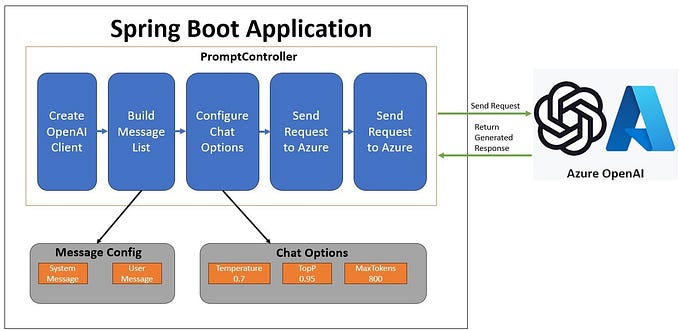

To build on top of pretrained LLMs, the primary task is integrating these models into your application framework. This starts with passing user queries to the model and making sure its responses are handled and displayed correctly. In other words, development largely comes down to integrating the LLM like any other service via an API.
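The integration pattern can be sketched as a thin wrapper around the model call. In the example below, `fake_llm` is a hypothetical stand-in for a hosted model, and `handle_user_query` is a name invented for this sketch; a real application would replace the stub with an HTTP request to its provider's API.

```python
from typing import Callable


def handle_user_query(query: str, llm_call: Callable[[str], str]) -> dict:
    """Validate the query, send it to the model, and shape the reply for display."""
    query = query.strip()
    if not query:
        return {"ok": False, "answer": "", "error": "Empty query"}
    try:
        answer = llm_call(query)
    except Exception as exc:  # a real client might see timeouts or network errors
        return {"ok": False, "answer": "", "error": str(exc)}
    return {"ok": True, "answer": answer.strip(), "error": None}


def fake_llm(prompt: str) -> str:
    """Hypothetical model stub; a real client would call the provider's API here."""
    return f"  (model reply to: {prompt})  "


result = handle_user_query("  What is a transformer? ", fake_llm)
empty = handle_user_query("   ", fake_llm)

print(result)
print(empty)
```

Passing the model call in as a function keeps the application logic independent of any particular provider, which also makes it easy to test without network access.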

While the foundational model provides a broad understanding, often its outputs need refinement to meet specific application needs. For example, a generic LLM might provide a good general explanation of a medical condition, but a healthcare application may require more precise, jargon-specific responses tailored to medical professionals. To achieve this, customization techniques are applied to enhance its performance in targeted areas.

I want to introduce you to three primary techniques: prompt engineering, Retrieval-Augmented Generation (RAG), and fine-tuning.

It’s important to understand that, in the order listed, these techniques offer increasing potential to enhance performance, but they also increase in complexity and cost to implement. They also require different areas of expertise. Nevertheless, all of them are still less expensive than creating a model from scratch.

Prompt Engineering

Prompt engineering is the simplest and least costly technique, suitable for users without a technical background. By carefully designing the inputs to the LLM, you can guide the model to produce more relevant and accurate outputs. For instance, by providing context or examples within the prompt, you can significantly improve the quality of the response. This approach is especially useful for generating creative content, customer service interactions, or any application where the input-output relationship can be explicitly defined.
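Providing context and examples within the prompt is often done with a small template. The sketch below assembles a few-shot prompt from an instruction, worked examples, and the user's question. The `Q:`/`A:` layout and the sample texts are illustrative assumptions, not a required format.

```python
def build_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Combine an instruction, worked examples, and the new question into one prompt."""
    lines = [instruction, ""]
    for question, answer in examples:
        lines += [f"Q: {question}", f"A: {answer}", ""]
    # End with an open answer slot for the model to complete.
    lines += [f"Q: {query}", "A:"]
    return "\n".join(lines)


prompt = build_prompt(
    "You are a support assistant. Answer in one short sentence.",
    [("How do I reset my password?", "Use the 'Forgot password' link on the login page.")],
    "How do I change my email address?",
)

print(prompt)
```

The examples show the model the desired tone and length, so the answer to the final question tends to follow the same pattern.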

Retrieval-Augmented Generation (RAG)

In RAG, the LLM is augmented with a retrieval mechanism that searches a database or external corpus for information relevant to the query. This is particularly useful for applications requiring up-to-date or domain-specific knowledge that the LLM may not have been trained on extensively.

For example, a customer support bot could use RAG to pull in the latest product manuals or troubleshooting guides from a company’s database, providing precise answers that are directly relevant to the user’s question. Implementing RAG requires software engineering skills to integrate the retrieval system with the LLM.
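A stripped-down version of the retrieval step might look like the following. It ranks documents by word overlap (cosine similarity over word counts) and places the best match into the prompt. This is a toy stand-in: the documents and function names are invented, and real RAG systems typically use learned embeddings and a vector database instead of word counts.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count lowercase word occurrences as a crude text representation."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Insert the retrieved passages into the prompt ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


documents = [
    "To reset the router, hold the reset button for ten seconds.",
    "The warranty covers hardware defects for two years.",
    "Firmware updates are installed from the admin dashboard.",
]
query = "How do I reset my router?"
top = retrieve(query, documents)
prompt = build_rag_prompt(query, documents)

print(prompt)
```

The resulting prompt would then be sent to the LLM, which answers from the retrieved passage rather than from its training data alone.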

Fine-tuning

Fine-tuning is the most complex and resource-intensive customization technique. It involves taking a pre-trained model and continuing its training on a new dataset that is specific to your use case. This process adjusts the model’s parameters to better fit the new data, resulting in improved performance for specialized tasks.

For example, fine-tuning an LLM on medical literature can make it proficient in understanding and generating text related to healthcare. This technique requires expertise in machine learning, access to appropriate datasets, and significant computational power to perform the training. Despite the complexity, fine-tuning can provide significant performance improvements, making it ideal for high-stakes applications where precision is critical.
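Conceptually, fine-tuning is the same training loop as before, except that it starts from parameters that have already been learned. The toy sketch below begins with "pretrained" values for a two-parameter linear model and continues gradient descent on a small domain dataset. All numbers are invented for illustration; real fine-tuning updates the weights of a large neural network using an ML framework.

```python
def mse(w: float, b: float, data) -> float:
    """Mean squared error of the model y = w * x + b on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradient_steps(w: float, b: float, data, lr: float, steps: int):
    """Continue training from the given parameters with plain gradient descent."""
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


# Assumed result of earlier training on general data that follows y = 2x.
pretrained_w, pretrained_b = 2.0, 0.0

# Small domain dataset with a slightly different pattern (y = 2x + 1).
domain_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]

loss_before = mse(pretrained_w, pretrained_b, domain_data)
tuned_w, tuned_b = gradient_steps(pretrained_w, pretrained_b, domain_data, lr=0.05, steps=500)
loss_after = mse(tuned_w, tuned_b, domain_data)

print(loss_before, loss_after)
print(round(tuned_w, 3), round(tuned_b, 3))
```

Because the pretrained parameters are already close to what the domain data requires, the model only needs a modest adjustment rather than learning everything from zero.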

Conclusion

Understanding the nature of LLMs is crucial to enter this complex but fascinating new category of software solutions. Choosing pretrained LLMs is a great starting point for most, as it allows you to leverage existing models’ capabilities while focusing on customization to suit specific needs.

By selecting the right customization technique — whether it’s prompt engineering, Retrieval-Augmented Generation (RAG), or fine-tuning — you can significantly enhance the performance and relevance of your AI solutions. Each method offers unique benefits and requires different levels of expertise, enabling you to tailor your approach based on your project’s requirements.

If you’re eager to dive deeper and gain hands-on experience in developing both RAG and fine-tuned solutions, I invite you to join my upcoming Coursera course. This course will provide you with the knowledge and skills needed to design, develop, and optimize advanced LLM applications using the latest tech stack. A key highlight of the course is building an app that interacts with the original Llama 2 paper published by Meta, allowing you to create your own powerful learning buddy that can answer all your questions on Llama 2.

Don’t miss this opportunity to become an early adopter and expert in this exciting field. Sign up for the waiting list now:

By the end of the course, you’ll be equipped to tackle complex LLM projects and drive innovation in your career.